I’ve been following the US Air Force’s Gorgon Stare project with great interest over the past couple of years. It’s about to become operational aboard the USAF’s MQ-9 Reaper unmanned aerial vehicles. Defense Technology International reports:

Gorgon Stare was conceived, designed and developed in less than three years by prime contractor Sierra Nevada Corp. … It offers an exponential expansion in the scope, amount, quality and distribution of video provided to ground troops, manned aircraft crews, ISR processing centers and others …

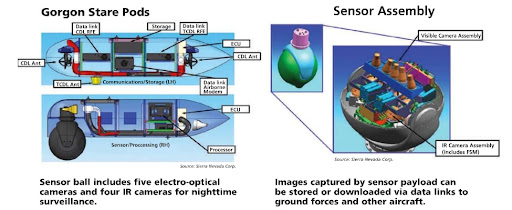

Gorgon Stare’s payload is contained in two pods slightly larger than, but about the same total weight as the two 500lb. GBU-12 laser-guided bombs the Reaper carries. The pods attach to the inside weapons racks under the wing.

One pod carries a sensor ball produced by subcontractor ITT Defense that protrudes from the pod’s bottom. The ball contains five electro-optical (EO) cameras for daytime and four infrared (IR) cameras for nighttime ISR, positioned at different angles for maximum ground coverage. The pod also houses a computer processor. The cameras shoot motion video at 2 frames/sec., as opposed to full-motion video at 30 frames/sec. The five EO cameras each shoot two 16-megapixel frames/sec., which are stitched together by the computer to create an 80-megapixel image. The four IR cameras combined shoot the equivalent of two 32-megapixel frames/sec. The second Gorgon Stare pod contains a computer to process and store images, data-link modem, two pairs of Common Data Link and Tactical Common Data Link antennas, plus radio frequency equipment.

Gorgon Stare pods

Gorgon Stare is operated independently but in coordination with the Reaper’s crew by a two-member team working from a dedicated ground station, which fits on the back of a Humvee. A second Humvee carries a generator and spare parts. A separate, forward-deployed processing, exploitation and dissemination team co-located with the Gorgon Stare ground station coordinates with commanders in-theater, directing the system’s sensors and exploiting their imagery in real time.

The result is a system that offers a ‘many orders of magnitude’ leap beyond the ‘soda straw’ view provided by the single EO/IR camera carried by an MQ-1 Predator or a conventional Reaper UAV … The video taken by Gorgon Stare’s cameras can be ‘chipped out’ into 10 individual views and streamed to that many recipients or more via the Tactical Common Data Link (TCDL). Any ground or airborne unit within range of Gorgon Stare’s TCDL and equipped with [an appropriate receiver] can view one of the chip-outs.

At the same time, Gorgon Stare will process the images from all its cameras in flight, quilting them into a mosaic for a single wide-area view. That image can be streamed to tactical operations centers or Air Force Distributed Common Ground System intelligence facilities by the Gorgon Stare ground station via line-of-sight data link. The ground station team, which will control the system’s sensors, can also transmit the relatively low-resolution wide-area view to recipients in-theater or elsewhere via other wideband communication devices, plus chip-out an additional 50-60 views and forward them as needed.

Gorgon Stare’s coverage area is classified but, as stated, considerably bigger than that provided by a single EO/IR camera … “Instead of looking at a truck or a house, you can look at an entire village or a small city.” Moreover, Gorgon Stare’s computers will store all imagery its cameras capture on a single mission, allowing the data to be transferred for exploitation after landing.

There’s much more at the link. (If the page doesn’t load correctly, you can find another version of the same article here.)
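As a rough sanity check on the camera figures quoted above, here is a minimal Python sketch of the arithmetic. It assumes the stitched mosaic is simply the sum of the individual camera frames with no overlap, which is what the article's 80-megapixel figure implies. The function and constant names are my own, for illustration only.

```python
# Figures taken from the Defense Technology International excerpt above.
EO_CAMERAS = 5                 # daytime electro-optical cameras
EO_MEGAPIXELS_PER_FRAME = 16   # megapixels per EO camera frame
IR_COMBINED_MEGAPIXELS = 32    # combined IR frame size, as quoted
FRAMES_PER_SECOND = 2          # Gorgon Stare "motion video" rate
FULL_MOTION_FPS = 30           # conventional full-motion video rate


def mosaic_megapixels(cameras: int, megapixels_each: int) -> int:
    """Size of one stitched frame, assuming no overlap between cameras."""
    return cameras * megapixels_each


def megapixels_per_second(megapixels_per_frame: int, fps: int) -> int:
    """Raw pixel throughput, ignoring bit depth and compression."""
    return megapixels_per_frame * fps


eo_mosaic = mosaic_megapixels(EO_CAMERAS, EO_MEGAPIXELS_PER_FRAME)
eo_rate = megapixels_per_second(eo_mosaic, FRAMES_PER_SECOND)
ir_rate = megapixels_per_second(IR_COMBINED_MEGAPIXELS, FRAMES_PER_SECOND)

print(f"EO mosaic: {eo_mosaic} MP per frame")
print(f"EO throughput: {eo_rate} MP/s, IR throughput: {ir_rate} MP/s")
print(f"Frame rate is 1/{FULL_MOTION_FPS // FRAMES_PER_SECOND} of full-motion video")
```

The trade-off is visible in the numbers: the system gives up frame rate (2 frames/sec. instead of 30) in exchange for a much larger image per frame.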

Gorgon Stare promises to be a major advance over current sensor technology, as deployed on MQ-1 and MQ-9 UAVs. However, it’s only an interim solution. The Defense Advanced Research Projects Agency (DARPA) is developing something far more powerful: the Autonomous Real-time Ground Ubiquitous Surveillance – Imaging System (ARGUS-IS). It’s described as follows:

The technical emphasis of the program is on the development of the three subsystems; a 1.8 Gigapixels video sensor, an airborne processing subsystem, and a ground processing subsystem; that will be integrated together to form ARGUS-IS. The 1.8 Gigapixel video sensor produces more than 27 Gigapixels per second running at a frame rate of 15 Hz. The airborne processing subsystem is modular and scalable providing more than 10 TeraOPS of processing. The Gigapixel sensor subsystem and airborne processing subsystem will be integrated into the A-160 Hummingbird, an unmanned air vehicle for flight testing and demonstrations. The ground processing subsystem will record and display information down linked from the airborne subsystem. The first application that will be embedded into the airborne processing subsystem is a video window capability. In this application, users from the ground will be able to select a minimum of 65 independent video windows throughout the field of view. The video windows running at the sensor frame rates will be down linked to the ground in real-time. Video windows can be utilized to automatically track multiple targets as well as providing improved situational awareness. A second application is to provide a real-time moving target indicator for vehicles throughout the entire field of view in real-time.

ARGUS-IS will be as much of an improvement over Gorgon Stare as the latter is over current sensor technology.
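To put a number on that comparison, here is a small Python sketch that works only from the figures quoted in this post: 1.8 gigapixels at 15 Hz for ARGUS-IS, and an 80-megapixel daytime mosaic at 2 frames/sec. for Gorgon Stare. It counts raw pixels per second and nothing else. Coverage area, bit depth, compression and downlink limits are all ignored, so treat the ratio as a back-of-the-envelope estimate. The variable names are illustrative.

```python
# Work in megapixels so the arithmetic stays in exact integers.
ARGUS_SENSOR_MEGAPIXELS = 1800      # 1.8 gigapixels, per the DARPA description
ARGUS_FRAME_RATE_HZ = 15
GORGON_EO_MOSAIC_MEGAPIXELS = 80    # five 16 MP frames stitched together
GORGON_FRAME_RATE_HZ = 2

# Raw pixel throughput of each sensor, in megapixels per second.
argus_mp_per_sec = ARGUS_SENSOR_MEGAPIXELS * ARGUS_FRAME_RATE_HZ
gorgon_mp_per_sec = GORGON_EO_MOSAIC_MEGAPIXELS * GORGON_FRAME_RATE_HZ

# How many times more raw pixels per second ARGUS-IS would produce.
throughput_ratio = argus_mp_per_sec / gorgon_mp_per_sec

print(f"ARGUS-IS: {argus_mp_per_sec / 1000:.1f} gigapixels/sec")
print(f"Gorgon Stare (EO): {gorgon_mp_per_sec / 1000:.2f} gigapixels/sec")
print(f"Ratio: about {throughput_ratio:.0f}x")
```

The first figure reproduces the 27 gigapixels per second in the DARPA description, which suggests that number is simply sensor size multiplied by frame rate.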

However, these advances are themselves posing headaches for those using the data provided by such systems. The Washington Times reported yesterday:

The U.S. military is fast running out of human analysts to process the vast amounts of video footage collected by the robotic planes and aerial sensors that blanket Afghanistan and other fronts in the war on terrorism.

Speaking at an intelligence conference last week, Marine Corps Gen. James E. Cartwright, vice chairman of the Joint Chiefs of Staff, said he would need 2,000 analysts to process the video feeds collected by a single Predator drone aircraft fitted with next-generation sensors.

“If we do scores of targets off of a single [sensor], I now have run into a problem of generating analysts that I can’t solve,” he said, adding that he already needs 19 analysts to process video feeds from a single Predator using current sensor technology.

. . .

It’s a classic conundrum for U.S. intelligence: Information-gathering technology has far outpaced the ability of computer programs — much less humans — to make sense of the data.

Just as the National Security Agency needed to develop computer programs to data-mine vast amounts of telephone calls, Web traffic and e-mails on the fiber-optic networks it began intercepting after Sept. 11, 2001, the military today is seeking computer programs to help it sort through hours of uneventful video footage recorded on the bottom of pilotless aircraft to find the telltale signs of a terrorist the drone is targeting.

. . .

Gen. Cartwright said he gets “love notes” from Army Gen. David H. Petraeus, the commander of coalition forces in Afghanistan, saying he has an 800 percent shortfall of ISR assets.

Air Force Lt. Gen. John C. Koziol, director of the ISR task force, told the intelligence conference last week that he was looking to get new products out to the battlefield within a year of issuing contracts. He also stressed that he needed computer filters to help his analysts sift through all the video feeds.

“We don’t have the time to try to discover data anymore,” Gen. Koziol said. “Especially when we are putting wide-area surveillance into country rapidly right now. We have to increase discoverability. We have to allow that analyst sitting on the ground looking at this massive amount of data, he or she does not have time to [sift] through all this stuff.”

. . .

Noah Shachtman, a nonresident fellow at the Brookings Institution and editor of Wired’s Danger Room, said the problem facing the military is similar to that faced by large cities that have installed cameras on traffic lights and telephone poles to prevent crime.

“The problem is that the more sensors you put up there, the more analysts you need,” he said. “Ninety percent of the footage will be useless. The problem is, you don’t know which 10 percent will be important. Just having someone stare at it, bleary-eyed and slack-jawed, is a huge waste of time.”

Again, there’s more at the link.
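The analyst figures Gen. Cartwright cites can be put side by side in a few lines of Python. This is only a sketch: the per-aircraft numbers (19 and 2,000) come from the article, but the fleet size of 10 aircraft is a hypothetical value I've picked purely to show how the totals scale, and the function name is my own.

```python
ANALYSTS_PER_PREDATOR_CURRENT = 19      # current sensor technology, as quoted
ANALYSTS_PER_PREDATOR_NEXT_GEN = 2000   # next-generation sensors, as quoted
HYPOTHETICAL_FLEET_SIZE = 10            # assumption for illustration only


def analysts_needed(aircraft: int, analysts_per_aircraft: int) -> int:
    """Total analysts required if every aircraft needs the same number."""
    return aircraft * analysts_per_aircraft


# How much the per-aircraft requirement grows with the new sensors.
growth_factor = ANALYSTS_PER_PREDATOR_NEXT_GEN / ANALYSTS_PER_PREDATOR_CURRENT

current_total = analysts_needed(HYPOTHETICAL_FLEET_SIZE, ANALYSTS_PER_PREDATOR_CURRENT)
next_gen_total = analysts_needed(HYPOTHETICAL_FLEET_SIZE, ANALYSTS_PER_PREDATOR_NEXT_GEN)

print(f"Per-aircraft requirement grows about {growth_factor:.0f}x")
print(f"{HYPOTHETICAL_FLEET_SIZE} aircraft: {current_total} analysts now, "
      f"{next_gen_total} with next-generation sensors")
```

A hundredfold jump per aircraft is why the generals quoted above are asking for computer filters rather than more people.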

We’re in a fascinating period of technological development. Already we can do things that even a decade ago were the stuff of science-fiction writers’ imaginations; and we’ll do a great deal more in the decade ahead . . . but the human factor is proving unable to keep up with the technology. Could it be that we’re drowning our anti-terrorism efforts in a sea of data so vast that it’s beyond timely analysis and/or comprehension? Is this why Osama bin Laden and his fellow leaders of Al Qaeda are still uncaptured and unpunished for their crimes?

All the same, I’m sure our combat troops will welcome the new light that’s about to be shone on their operations. I wish we’d had this sort of thing when I was swanning around on patrol in the African bush. It would have helped enormously to be able to detect ambushes, enemy movements, etc. well before we landed up in the middle of them!

Peter

As if they weren't tempting enough targets as it is… This is a great idea against an unsophisticated enemy, but against any force with remotely capable air defenses these are going to be very close to the top of the list.

Anyway, if they're useful now to the guys on the ground then I'm happy to see them.

Jim

Parallel development: BAE's ARGUS (Autonomous Real-time Ground Ubiquitous Surveillance)

http://dronewarsuk.wordpress.com/2010/09/09/out-staring-the-gorgon-bae-drones-and-argus/

The air-to-ground equivalent of AN/AAQ-37 EO DAS?

In the near future, such technology could become the A/A and A/G "eye" of an autonomous UCAV.